Data Contracts: The Key to Scalable Decentralized Data Management?

It's 3 AM. The support team receives a critical alert: the data pipeline feeding the real-time sales dashboard is down. Preliminary analysis reveals that the e-commerce team modified the order data format without notice. A required field was renamed, and now the entire processing chain is paralyzed. This situation, unfortunately too common, illustrates an often-neglected reality: data is not just an asset, it's a product that requires rigorous lifecycle management.

Daily Life Without Data Contracts

Imagine a growing e-commerce company. Several teams work in parallel on different parts of the system:

The e-commerce team manages the sales platform and generates transaction data

The data science team develops recommendation models

The BI team produces reports for management

The marketing team uses customer data for campaigns

On the surface, everything works. Underneath, it's chaos:

Data engineers spend their days fixing broken pipelines because a field changed type or name

Data scientists discover their models are producing erroneous results due to silent changes in input data

The BI team must constantly verify if metrics are still calculated the same way

Meetings are filled with questions like: "Who changed this field?", "Why is the data different today?", "How are we supposed to use this column?"

The Hidden Cost of Missing Contracts

This situation has a real cost, often underestimated:

Business decisions made on incorrect data

Hours lost in debugging and reconciliation

Delayed data projects

Loss of trust in data

Stress and frustration in teams

This situation becomes even more critical in a Data Mesh context, where data responsibility is decentralized to business domains. Take the example of a bank I recently assisted in its Data Mesh transformation. Each domain - credit, savings, insurance - became responsible for its own data. Without data contracts, this decentralization initially amplified the problems: inconsistencies multiplied, traceability became a nightmare, and trust in data eroded.

Teams can spend up to 40% of their time managing these coordination and quality issues. It's like building a house where each craftsman uses their own units of measurement, but at the scale of an entire city.

The Emergence of Data Contracts

The Data Mesh transformation represents a fundamental change in how organizations manage their data. In this model, each business domain becomes responsible for its own data, whether it's credit, savings, or insurance data for a bank, or sales, logistics, or marketing data for a retailer. This decentralization promises better agility and greater alignment with business needs.

However, this increased domain autonomy creates new challenges. Without proper structure, coordination problems multiply, and the time lost to data consistency and quality issues becomes a hidden but significant cost. Data contracts emerge as a structured response to these challenges.

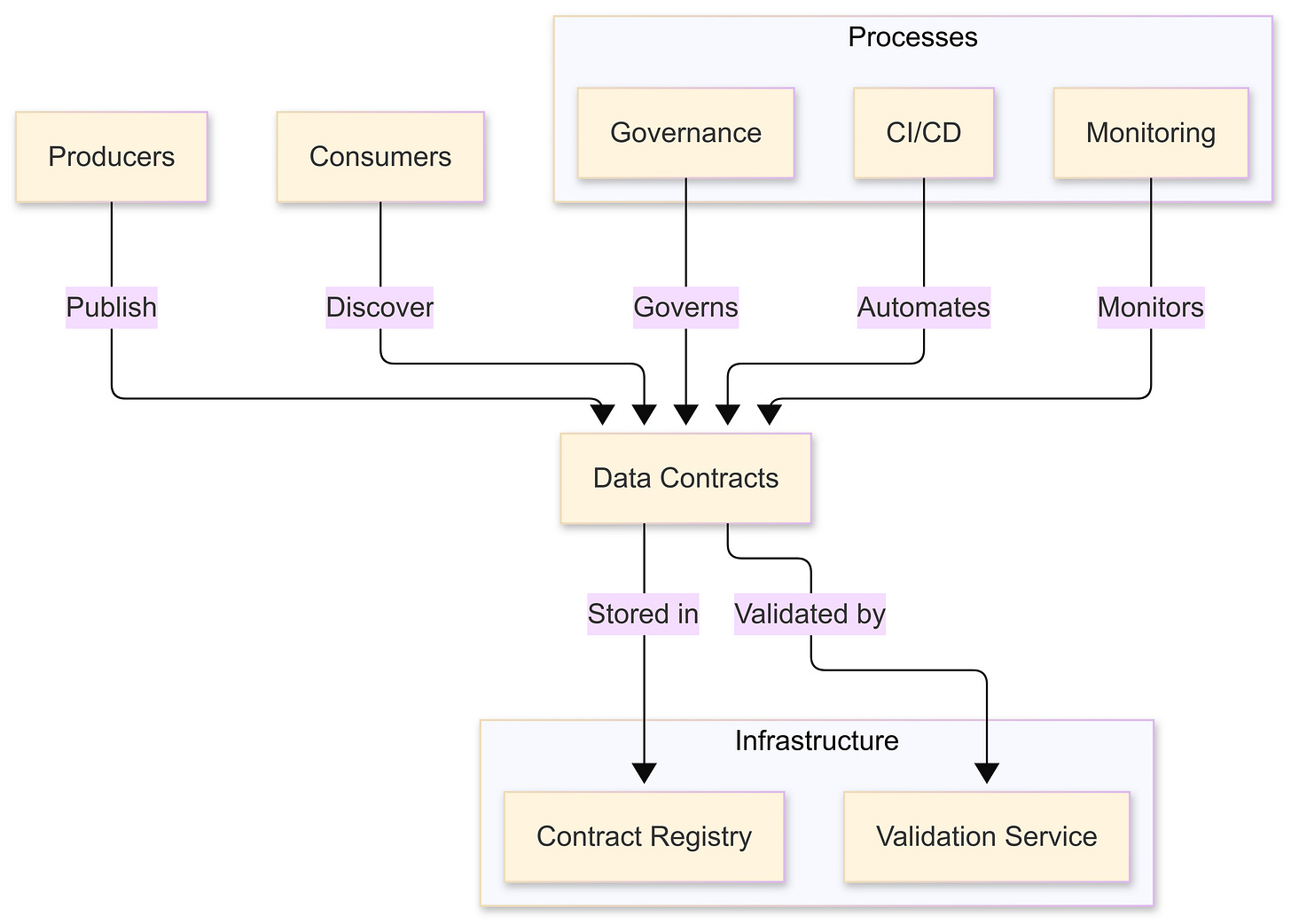

Let's examine the typical architecture of a Data Contracts implementation:

This architecture illustrates the essential components of a Data Contracts system. The contract registry centralizes definitions, while the validation service ensures their compliance. Integration with CI/CD processes enables automation, while monitoring ensures continuous quality. Governance, finally, provides the framework necessary for controlled evolution.
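To make the roles of the registry and the validation service more concrete, here is a minimal in-memory sketch. It is only an illustration under stated assumptions: the `ContractRegistry` class, the `missing_fields` helper, and the simplified `fields` key are hypothetical names invented for this example, not part of ODCS or of any particular tool.

```python
class ContractRegistry:
    """In-memory stand-in for a contract registry (hypothetical, for illustration)."""

    def __init__(self):
        # Maps contract id -> {version string -> contract definition}
        self._contracts = {}

    def register(self, contract):
        """Store a contract under its id and version; refuse to overwrite a version."""
        versions = self._contracts.setdefault(contract["id"], {})
        if contract["version"] in versions:
            raise ValueError(f"version {contract['version']} already registered")
        versions[contract["version"]] = contract

    def latest(self, contract_id):
        """Return the highest registered version, comparing version parts as integers."""
        versions = self._contracts[contract_id]
        newest = max(versions, key=lambda v: tuple(int(p) for p in v.split(".")))
        return versions[newest]


def missing_fields(contract, record):
    """Validation-service stand-in: list the contract fields absent from a record."""
    return [name for name in contract["fields"] if name not in record]


registry = ContractRegistry()
registry.register({
    "id": "urn:datacontract:customer:profile",
    "version": "1.0.0",
    "fields": ["customer_id", "email"],
})
registry.register({
    "id": "urn:datacontract:customer:profile",
    "version": "1.10.0",
    "fields": ["customer_id", "email", "first_name"],
})

current = registry.latest("urn:datacontract:customer:profile")
record = {"customer_id": "CUST123456", "email": "john.doe@email.com"}

print(current["version"])               # 1.10.0
print(missing_fields(current, record))  # ['first_name']
```

A real registry would persist contracts and expose them over an API, but the two responsibilities stay the same: one component owns the definitions, the other checks data against them.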

Open Data Contract Standard (ODCS)

In response to these challenges, a standard has emerged: the Open Data Contract Standard (ODCS). This isn't just another technical specification - it's a common language that allows teams to clearly communicate their expectations and commitments regarding data. Here's a concrete example of an ODCS contract for a customer profile table:

    apiVersion: v3.0.0
    kind: DataContract
    id: urn:datacontract:customer:profile
    domain: customer-domain
    tenant: CustomerDataInc
    name: Customer Profile
    version: 1.0.0
    status: active
    description:
      purpose: "Manage and provide access to core customer profile information"
      usage: "Customer data management and analytics"
      limitations: "PII data subject to GDPR and CCPA compliance"
      authoritativeDefinitions:
        - type: privacy-statement
          url: https://company.com/privacy/gdpr.pdf
    schema:
      - name: CustomerProfile
        physicalName: customer_profiles
        physicalType: table
        description: "Customer profile information"
        dataGranularityDescription: "One row per customer"
        tags: ["customer", "profile", "pii"]
        properties:
          - name: customer_id
            logicalType: string
            physicalType: text
            description: "Unique customer identifier"
            isNullable: false
            isUnique: true
            criticalDataElement: true
            examples:
              - "CUST123456"
              - "CUST789012"
          - name: email
            logicalType: string
            physicalType: text
            description: "Customer email address"
            isNullable: false
            criticalDataElement: true
            classification: restricted
            customProperties:
              - property: pii
                value: true
            examples:
              - "john.doe@email.com"
          - name: first_name
            logicalType: string
            physicalType: text
            description: "Customer first name"
            isNullable: false
            classification: restricted
            customProperties:
              - property: pii
                value: true
            examples:
              - "John"
          - name: last_name
            logicalType: string
            physicalType: text
            description: "Customer last name"
            isNullable: false
            classification: restricted
            customProperties:
              - property: pii
                value: true
            examples:
              - "Doe"
          - name: birth_date
            logicalType: date
            physicalType: date
            description: "Customer birth date"
            isNullable: false
            classification: restricted
            customProperties:
              - property: pii
                value: true
            examples:
              - "1980-01-01"
          - name: address
            logicalType: object
            physicalType: object
            description: "Customer address"
            classification: restricted
            customProperties:
              - property: pii
                value: true
        quality:
          - rule: nullCheck
            description: "Critical fields should not be null"
            dimension: completeness
            severity: error
            businessImpact: operational
          - rule: uniqueCheck
            description: "Customer ID must be unique"
            dimension: uniqueness
            severity: error
            businessImpact: critical
    slaProperties:
      - property: latency
        value: 1
        unit: d
      - property: generalAvailability
        value: "2023-01-01T00:00:00Z"
      - property: retention
        value: 5
        unit: y
      - property: frequency
        value: 1
        unit: d
      - property: timeOfAvailability
        value: "09:00-08:00"
        driver: regulatory
    team:
      - username: jsmith
        role: Data Product Owner
        dateIn: "2023-01-01"
      - username: mwilson
        role: Data Steward
        dateIn: "2023-01-01"
    roles:
      - role: customer_data_reader
        access: read
        firstLevelApprovers: Data Steward
      - role: customer_data_admin
        access: write
        firstLevelApprovers: Data Product Owner
        secondLevelApprovers: Privacy Officer
    support:
      - channel: "#customer-data-help"
        tool: slack
        url: https://company.slack.com/customer-data-help
      - channel: customer-data-support
        tool: email
        url: mailto:customer-data@company.com
    servers:
      - server: local
        type: local
        format: parquet
        path: ./data/customer_profiles.parquet
      - server: prod
        type: s3
        format: parquet
        path: s3://data-lake-prod/customer/profiles/
        description: "Production customer profiles data"
    servicelevels:
      availability:
        description: "Profile data availability"
        percentage: "99.9%"
        measurement: "daily"
      privacy:
        description: "Privacy compliance"
        requirements:
          - "GDPR Article 17 - Right to erasure"
          - "CCPA Section 1798.105 - Right to deletion"
        responseTime: "30 days"
    tags:
      - customer
      - profile
      - pii
      - gdpr
    customProperties:
      - property: dataDomain
        value: customer
      - property: dataClassification
        value: restricted
      - property: retentionPolicy
        value: gdpr_compliance

Let's analyze each section of this contract in detail:

The contract header establishes its identity and governance. The `id` field uniquely identifies the contract, while the other header fields (`name`, `version`, `status`, `domain`) and the `team` and `support` sections provide essential metadata, including contact information for the responsible team.

The `servers` section defines where and how the data is stored. In this example, we have a local configuration for development and a production configuration in S3.

The `schema` section describes the data structure with a precise definition of each field. Fields containing personal data (PII) are clearly identified, and validation constraints are explicit.

The `description` and `slaProperties` sections establish usage conditions, including regulatory compliance aspects and the data retention duration, expressed as a value and a unit (here, 5 years).

The `servicelevels` section defines measurable commitments on data availability and privacy compliance, with precise response times for requests related to personal rights.
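Because these commitments are expressed as values and units, they can be checked by a program. The sketch below tests whether a dataset respects the `latency` entry of `slaProperties` from the contract above. The `UNIT_DAYS` mapping, the function names, and the timestamps are assumptions made for this illustration, and a year is approximated as 365 days.

```python
from datetime import datetime, timedelta, timezone

# Assumed mapping from the contract's unit codes to a number of days.
# "y" is approximated as 365 days for the purpose of this sketch.
UNIT_DAYS = {"d": 1, "y": 365}


def sla_duration(sla_properties, name):
    """Return the duration of a named SLA property (for example latency or retention)."""
    for prop in sla_properties:
        if prop["property"] == name:
            return timedelta(days=prop["value"] * UNIT_DAYS[prop["unit"]])
    raise KeyError(name)


def is_fresh(last_updated, now, sla_properties):
    """True when the data was refreshed within the contract's latency window."""
    return now - last_updated <= sla_duration(sla_properties, "latency")


# The two entries below mirror the slaProperties of the example contract.
sla_properties = [
    {"property": "latency", "value": 1, "unit": "d"},
    {"property": "retention", "value": 5, "unit": "y"},
]

now = datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc)
recent = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)  # 21 hours old
stale = datetime(2023, 12, 31, 8, 0, tzinfo=timezone.utc)  # 49 hours old

print(is_fresh(recent, now, sla_properties))  # True
print(is_fresh(stale, now, sla_properties))   # False
```

In practice the `last_updated` timestamp would come from the storage layer or a pipeline run log, and a failed check would raise an alert before consumers notice stale data.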

Implementation: From Concepts to Reality

The implementation of data contracts in a data lake context is particularly relevant, especially in a medallion architecture (bronze, silver, gold). Let's take the example of the sales domain, where raw transaction data is progressively refined to feed critical analyses and dashboards.
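As a rough illustration of a contract acting as a gate between layers, the sketch below applies the `isNullable` and `isUnique` flags to bronze rows and only promotes compliant rows to silver. The field names `transaction_id` and `amount`, the `validate_rows` function, and the quarantine list are hypothetical; treating a missing `isNullable` flag as "nullable" is also an assumption of this example.

```python
def validate_rows(rows, properties):
    """Split rows into (valid, rejected) using the isNullable and isUnique flags.

    For unique fields, the first occurrence of a value is kept and later
    duplicates are rejected.
    """
    seen = {p["name"]: set() for p in properties if p.get("isUnique")}
    valid, rejected = [], []
    for row in rows:
        errors = []
        for prop in properties:
            name = prop["name"]
            value = row.get(name)
            if value is None:
                # Assumption: a field without an isNullable flag may be null.
                if not prop.get("isNullable", True):
                    errors.append(f"{name} is null")
                continue
            if name in seen:
                if value in seen[name]:
                    errors.append(f"{name} is not unique")
                seen[name].add(value)
        if errors:
            rejected.append((row, errors))
        else:
            valid.append(row)
    return valid, rejected


# Hypothetical contract properties for a sales transactions table.
properties = [
    {"name": "transaction_id", "isNullable": False, "isUnique": True},
    {"name": "amount", "isNullable": False},
]

bronze = [
    {"transaction_id": "T1", "amount": 10.0},
    {"transaction_id": "T1", "amount": 12.5},  # duplicate identifier
    {"transaction_id": "T2", "amount": None},  # missing amount
    {"transaction_id": "T3", "amount": 7.0},
]

silver, quarantined = validate_rows(bronze, properties)
print([row["transaction_id"] for row in silver])  # ['T1', 'T3']
for row, errors in quarantined:
    print(row["transaction_id"], errors)
```

Quarantining rejected rows rather than dropping them keeps the evidence needed to discuss the violation with the producing team.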

Where to Start?

In a Data Mesh context, data contract adoption must align with domains' maturity as data producers. I've observed that organizations succeed better when they:

Identify a mature and motivated business domain to pilot the initiative. In retail, the sales domain often plays this role, creating a concrete example for other domains.

Start with a critical data product having multiple consumers. The silver transactions table is perfect: critical data for reporting, multiple analytical consumers, and clear quality needs.

Establish a short feedback loop with consumers. Data scientists analyzing purchase behaviors provide valuable feedback on necessary attributes and their quality constraints.

Progressively automate validations and monitoring, transforming the contract into a living tool rather than static documentation.

Document and share successes to create a snowball effect. When other domains see the reduction in incidents and improvement in analysis reliability, they naturally adopt the approach.
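One validation that lends itself to early automation is detecting breaking changes between two versions of a contract, which is exactly the kind of silent field rename described at the start of this article. The following is a minimal sketch, assuming contracts are already loaded as Python dictionaries. The `breaking_changes` function and its rules are illustrative choices, not a standard algorithm.

```python
def breaking_changes(old_props, new_props):
    """Report changes between two property lists that can break consumers."""
    old = {p["name"]: p for p in old_props}
    new = {p["name"]: p for p in new_props}
    problems = []
    for name, prop in old.items():
        if name not in new:
            # A rename looks like a removal from the consumer's point of view.
            problems.append(f"removed or renamed field: {name}")
            continue
        candidate = new[name]
        if prop["logicalType"] != candidate["logicalType"]:
            problems.append(
                f"type change on {name}: "
                f"{prop['logicalType']} -> {candidate['logicalType']}"
            )
        # Assumption: a field without an isNullable flag may be null.
        if not prop.get("isNullable", True) and candidate.get("isNullable", True):
            problems.append(f"{name} became nullable")
    return problems


v1 = [
    {"name": "customer_id", "logicalType": "string", "isNullable": False},
    {"name": "email", "logicalType": "string", "isNullable": False},
    {"name": "birth_date", "logicalType": "date", "isNullable": False},
]
v2 = [
    {"name": "customer_id", "logicalType": "string", "isNullable": False},
    {"name": "email_address", "logicalType": "string", "isNullable": False},
    {"name": "birth_date", "logicalType": "string", "isNullable": False},
]

problems = breaking_changes(v1, v2)
for problem in problems:
    print(problem)
# removed or renamed field: email
# type change on birth_date: date -> string
```

Run in a CI job, a non-empty result could block the merge or require a major version bump, so that consumers learn about the change before it reaches production.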

The goal isn't immediate perfection but to establish a new standard of collaboration around data. For data contract adoption to succeed, every team must be involved and must respect the contract format; otherwise, your production deployment will fail.

Conclusion

Data contracts in a data lake aren't just documentation - they become the guardrail ensuring data quality and reliability at each transformation step. By formalizing expectations and responsibilities, they create a trust framework that allows building reliable analyses on quality data.

In the next article, we'll explore how these contracts integrate into a global data governance strategy, emphasizing contract evolution and maintenance over time.